I thought I had read it here. I'm moving anyway now, but thanks for the tip.

EDIT: I found the following and it works, thanks to everyone and thanks to the author!

For those who don't have an open browser session, here is a small but functional Bash script that creates a myFedditUserData.json in the directory it is run from, which can then be imported on other instances.

Requirements:

- Linux or Mac OS X installation

- jq installed (on Ubuntu/Debian/Mint, e.g. via `sudo apt install -y jq`)

Instructions:

- Save the following script under any name with a `.sh` extension, e.g. `getMyFedditUserData.sh`

- Open the script in any text editor and fill in username/email and password (optionally change the instance)

- Open a terminal in the script's folder and run `chmod +x getMyFedditUserData.sh` (adjust the name if needed)

- Run `./getMyFedditUserData.sh`

- A fresh `myFedditUserData.json` now sits in the folder next to the script

Note: The script is fairly simple. It requests a JWT bearer token and passes it as a header in the GET request to https://feddit.de/api/v3/user/export_settings. If you don't have Linux/Mac OS X available, you can reproduce the process with other tools.

The script:

    #!/bin/bash
    # Basic login script for Lemmy API

    # CHANGE THESE VALUES
    my_instance="https://feddit.de" # e.g. https://feddit.nl
    my_username=""                  # e.g. freamon
    my_password=""                  # e.g. hunter2

    ########################################################
    # Lemmy API version
    API="api/v3"

    ########################################################
    # Turn off history substitution (avoid errors with ! usage)
    set +H

    ########################################################
    # Login: POST the credentials, the response JSON contains the JWT
    login() {
      end_point="user/login"
      # Let jq build the JSON so quotes or backslashes in the password are escaped
      json_data=$(jq -n --arg u "$my_username" --arg p "$my_password" \
        '{username_or_email: $u, password: $p}')
      url="$my_instance/$API/$end_point"
      curl -H "Content-Type: application/json" -d "$json_data" "$url"
    }

    # Get userdata as JSON, authenticated with the bearer token
    getUserData() {
      end_point="user/export_settings"
      url="$my_instance/$API/$end_point"
      curl -H "Authorization: Bearer ${JWT}" "$url"
    }

    JWT=$(login | jq -r '.jwt')
    printf 'JWT Token: %s\n' "$JWT"
    getUserData | jq '.' > myFedditUserData.json
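One thing the script does not do is check whether the login actually worked. With `jq -r '.jwt'`, a response without a `jwt` field yields the literal string `null`, and the export call would then run with a useless token. A minimal sketch of a guard, where the function name `jwt_is_valid` is my own invention and not part of the original script:

```shell
#!/bin/bash
# Returns 0 if the given string looks like a usable token, 1 otherwise.
# Only checks for "empty" and the literal "null" that jq -r prints
# for a missing key; it does not verify the token itself.
jwt_is_valid() {
  local token="$1"
  [ -n "$token" ] && [ "$token" != "null" ]
}

# Hypothetical usage in the script, right after JWT=$(login | jq -r '.jwt'):
#   if ! jwt_is_valid "$JWT"; then
#     echo "Login failed, no token received" >&2
#     exit 1
#   fi
```

This only catches the obvious failure case (wrong credentials, wrong instance URL), which is probably the most common one.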

Feddit data export

Hi. A while ago I read about a way to export your data from feddit.de for moving to another instance. I can't find the post anymore, though, because the auto-hide feature won't give it back. Can someone save me from subscribing to 100 communities all over again? Thanks!

And the real fun part is that, once they have expired, they can cause skin cancer.

I thought so, but was sure it HAD to be something else. That's how wrong you can be.

What the fuck is Tzabatta?

How would that work? I couldn't find it in that post.

I agree, but most games also have a higher ratio of value to cost. If I buy a game for 50 bucks, I'll play it for many hours, let's say 50. So that will be 1 buck per hour, pretty good. If I buy a new movie that isn't available for subscription streaming, the cost per hour is easily double that. If I have a subscription and need another one now, that also lowers its value. It also comes with lower comfort and ease of consumption, as you mentioned.

Another great example is YouTube Premium. I'll gladly pay 5 or 7 bucks for ad-free content, not 14 though. I don't need YouTube Music. So I block ads where I can and donate to creators if I can afford it. They could have had my money, but they are simply greedy.

I also hate it when deals are altered without my consent. It makes me feel like a sucker, and therefore makes me less likely to keep investing.

Because often enough, results in science contradict religious belief. Heliocentric model, for example.

You most likely won't utilize these speeds in a home lab, but I understand why you want them. I do too. I settled for 2.5 Gbit because that was a sweet spot in terms of speed, cost and power draw. In total, I idle at about 60W for the following systems:

- Lenovo M90q (i7 10700, 32GB, 3 x 1 TB SSD) running Proxmox, 15W idle

- Custom NAS (Ryzen 2400G, 16GB, 4x12TB HDD) running TrueNAS (30W idle)

- Firewall (N5105, 8GB) running OPNsense (8W idle)

- FritzBox 6660 Cable, which functions as a glorified access point, 10W idle
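As a quick sanity check on the "about 60W" figure, the four idle values from the list can simply be added up. The variable names are just labels I picked for this sketch:

```shell
#!/bin/bash
# Idle power draw in watts, taken from the list above.
proxmox=15
nas=30
firewall=8
fritzbox=10

total=$(( proxmox + nas + firewall + fritzbox ))
echo "Total idle draw: ${total} W"
```

That comes out slightly above 60W, so the rounded number in the text holds.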

Weird, isn't it? A lot of those successful services have cute little mascots. It influences me more than it should.

I know exactly what you mean. I'd also prefer Debian, Mint or Fedora. Each has its weaknesses, but you got to start somewhere. Go for it, then decide for yourself. It's not that hard to switch again.

I assume physical hardware is being rented out here. Often, though, it's cheaper to use virtual hardware (e.g. CPU cores): you then share the CPU with others, whereas with the former only you have access to it. That's simplified, but more or less how it is...

I'd be very careful to publicly host Jellyfin. Although not necessarily true, it basically advertises that you're pirating content while also giving out your IP. Even if you rip your own media, this can still be illegal. Please be careful.

Maybe you can put it behind some authentication or, even better, a VPN.

What scum!

Thanks to you too for the tip. I already have one, and a request for a change is underway. I also had to obtain it through an objection, and then I got 40 instead of 30. The lady from the Sozialverband then said I could sue, but that takes 2 years. So I waited and have now tried again.

All the best to you, it's always nice and a relief to hear from others!

Do you work at the Heute Show or in a hospital?

It would also be nice to find some humanity in this system... It's about the absolute minimum, and still you're portrayed as a lesser person, a supplicant or even a freeloader.

I've been chronically ill for 2.5 years and have already used up, or nearly used up, Krankengeld (sick pay) and ALG (unemployment benefit). So I applied for Bürgergeld in good time. Then I was told: nope, you can't work, so no entitlement. You have to go to Grundsicherung (basic income support). They, in turn, think I'm not sick enough. The lady from the SoVD told me I might end up getting nothing at all. For the pension I'm also too healthy (which simply isn't true, I'm pretty much as unable to work as it gets). Nobody listens or helps.

Then I finally got a nice gentleman from the Jobcenter on the phone who said we'd get this sorted and I shouldn't worry. The application goes out with all the supporting documents, wonderful. Then mail again: documents are missing that I had already sent. The lady on the phone noticed that too. Oh, and proof of the 300 euros in cash was requested as well. What????? Am I supposed to send them a photo of it? I know they're all overworked. Then maybe it would make sense to change the system.

More money is spent on keeping people away from help than there is help for people. At least that's how it feels. As a normal, healthy employee you usually don't notice what problems this causes for those affected.

When I then also have to endure the verbal outpourings of Merz or Lindner on the news, nothing but hatred comes up. I don't actually want that, and yet it seems justified. I never really understood the question "What radicalized you?" in leftist forums, let alone had an answer to it. Now I do.

With most firewalls, there is an option to download IP lists for blocking. There are several lists, which I don't recall right now, that aggregate DoH services. It's not perfect, but better than nothing.
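If a list has to be cleaned up before the firewall accepts it, something like the following might do. This is only a sketch: it assumes the common "one entry per line, `#` starts a comment" layout, the addresses are made-up documentation addresses, and `clean_ip_list` is a name I chose.

```shell
#!/bin/bash
# Strip comments, trailing whitespace and blank lines from a blocklist
# read on stdin, so that only the bare addresses remain.
clean_ip_list() {
  sed -e 's/#.*$//' -e 's/[[:space:]]*$//' -e '/^$/d'
}

# Sample data only, not a real DoH list.
printf '%s\n' \
  '# DoH resolvers (sample data)' \
  '192.0.2.10' \
  '' \
  '198.51.100.7  # example entry' | clean_ip_list
```

Many firewalls can fetch and parse such lists on their own, in which case this step isn't needed at all.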

I'll always tell my partner, that the spider she just found is, in fact, referred to as Bitey.

From what I found, Lemmy is much better in this regard. I've gotten lots of helpful answers here, so give it a go! There are also a ton of tutorials on YouTube, I recommend something like this for beginners.

What's up with the prices of smaller used drives?

I'm in the market for a used 4TB for my offsite backup. As I've recently acquired four 12TB drives (about 10000 hours and one to two years old) for 130€ each, I was optimistic. 30 to 40€ I thought. Easy.

WRONG! Used drive, failing SMART stats, 40€. Here is a new drive, no hours on it. Oh wait, it was cold storage and it's almost 8 years old. Price? 90€ (mind you, a new drive costs about 110€). Another drive has already failed, but someone wants 25€ for e-waste. No Sir, it worked fine when I used Check-Disk, please buy. Most of the decent ones are 70 to 80€, way too close to the new price. I PAID 130 FOR 12TB. These drives were almost new and under warranty. WHY DOES THIS NUMBNUT WANT 80 EURO FOR A USED 4TB DRIVE? And what sane person doesn't put SMART data in their listings??? I have to ask at least 50 percent of the time. Don't even get me started on those external hard drives, they were trash to begin with. I'm SO CLOSE to buying a high capacity drive, because in that segment, people

Traefik Docker Labels: Common Practice

Hej everyone. My traefik setup has been up and running for a few months now. I love it. It was a bit scary to switch at first, but I encourage you to look at it if you haven't. Middlewares are amazing: I mostly use them for CrowdSec and authentication. There are two things I could use some feedback on, though.

- I mostly use docker labels to set up routers in traefik. Some people only define one router (HTTP) and some define both (+ HTTPS). I did the latter.

    labels:
      - traefik.enable=true
      - traefik.http.routers.jellyfin.entrypoints=web
      - traefik.http.routers.jellyfin.rule=Host(`jellyfin.local.domain.de`)
      - traefik.http.middlewares.jellyfin-https-redirect.redirectscheme.scheme=https
      - traefik.http.routers.jellyfin.middlewares=jellyfin-https-redirect
      - traefik.http.routers.jellyfin-secure.entrypoints=websecure
      - traefik.http.routers.jellyfin-secure.rule=Host(`jellyfin.local.domain.de`)
      - traefik.http.routers.jellyfin-secure.middlewares=local-whitelis

Timing of Periodic Maintenance Tasks on TrueNAS Scale

EDIT: I found something looking through the source code on GitHub. I couldn't find anything at first, but then I searched for "periodic" and found something in middlewared/main.py.

These tasks (see below) are executed at system start and will be re-run after method._periodic.interval seconds. Looking at the log in /var/log/middlewared.log, I saw that the interval was 86400 seconds, exactly one day. So I'm assuming that the daily execution time is set at the last system start.

I've rebooted and will report back in a day. Maybe somebody can find the file to set it manually, not in source code. That is waaaay too advanced for me.

EDIT 2: I was correct, the tasks are executed 24 hours later. This gives at least a crude way to change their execution time: restart the machine.
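To work out when the tasks will fire next, the rule "first run at boot, then every interval seconds" can be put into a small calculation. This is a sketch of my reading of the behaviour described above, not something taken from the TrueNAS code; `next_run` and its arguments are made up for illustration.

```shell
#!/bin/bash
# next_run BOOT_EPOCH INTERVAL NOW_EPOCH
# Prints the epoch of the next run, assuming the first run happens at boot
# and every later run follows exactly INTERVAL seconds after the previous one.
next_run() {
  local boot="$1" interval="$2" now="$3"
  local completed=$(( (now - boot) / interval ))
  echo $(( boot + (completed + 1) * interval ))
}

# Example with made-up numbers: booted at epoch 1000, daily interval,
# asked 100 seconds after boot.
next_run 1000 86400 1100
```

On a live system the boot time could probably be fed in with `date -d "$(uptime -s)" +%s` and the current time with `date +%s`, assuming GNU date and a procps `uptime` that supports `-s`.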

Hej everyone, in the past few weeks, I've been digging my hands into TrueNAS and have since set up a nice little NAS for all my backup needs. The drives spin down when not in use, as the instance on

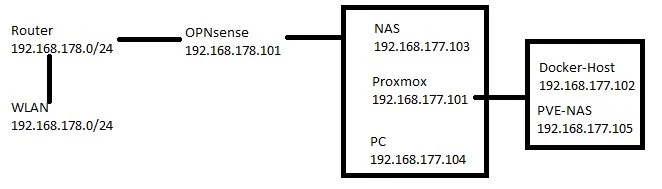

Feedback on Network Design and Proxmox VM Isolation

Network design. I started my homelab / selfhost journey about a year ago. Network design was the topic that scared me most. To challenge myself, and to learn about it, I bought myself a decent firewall box with 4 x 2.5G NICs. I installed OPNsense on it, following various guides. I set up my 3 LAN ports as a network bridge to connect my PC, NAS and server. I set the filtering to be applied between these different NICs, so as to learn more about the behavior of the different services. If I want to access anything on my server from my PC, there needs to be a rule allowing it. All other traffic is blocked. This setup works great so far and I'm really happy with it.

Here is where I ran into problems. I installed Proxmox on my server and am in the process of migrating all my services from my NAS over there. I thought that all traffic from a VM in Proxmox would go this route: first VM --> OPNsense --> other VM. Then, I could apply the appropriate firewall rules. This, however, doesn't seem to be the

Proxmox SMB Share not reaching full 2.5Gbit speed

EDIT: SOLUTION:

Nevermind, I am an idiot. As @ClickyMcTicker pointed out, it's the client side that is causing the trouble. His comment got me thinking, so I checked my testing procedure again. Turns out that, completely by accident, every time I copied files to the LVM-based NAS, I used the SSD on my PC as the source. In contrast, every time I copied to the ZFS-based NAS, I used my hard drive as the source. I did that about 10 times. Everything is fine now. Maybe this can help some other dumbass like me in the future. Thanks everyone!
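For anyone wondering why the numbers in the original post below looked off in the first place, here is the rough conversion from the iperf result to a file-copy ceiling. It's a sketch that ignores protocol overhead, and it assumes the copy speed was in megabytes per second.

```shell
#!/bin/bash
# Convert a link rate in Mbit/s to MB/s (8 bits per byte, integer division).
mbit_to_mbyte() {
  echo $(( $1 / 8 ))
}

iperf_mbit=2250   # 2.25 Gbit/s as measured with iperf
observed=150      # MB/s seen during the SMB copy
ceiling=$(mbit_to_mbyte "$iperf_mbit")
percent=$(( observed * 100 / ceiling ))
echo "Ceiling: ${ceiling} MB/s, observed: ${observed} MB/s (${percent}%)"
```

So the copies were running at roughly half of what the link could carry, which is what sent me looking for a bottleneck.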

Hello there.

I'm trying to set up a NAS on Proxmox. For storage, I'm using a single Samsung Evo 870 with 2TB (backups will be done anyway, no need for RAID). In order to do this, I set up a Debian 12 container, installed Cockpit and the tools needed to share via SMB. I set everything up and transferred some files: about 150 MB/s with huge fluctuations. Not great, not terrible. Iperf reaches around 2.25Gbit/s, so something is off. Let's do some testing. I s

Proxmox: data storage via NAS/NFS or dedicated partition

Black friday is almost upon us and I'm itching to get some good deals on missing hardware for my setup.

My boot drive will also be VM storage and reside on two 1TB NVMe drives in a ZFS mirror. I plan on adding another SATA SSD for data storage. I can't add more storage right now, as my M90q can't be expanded easily.

Now, how would I best setup my storage? I have two ideas and could use some guidance. I want some NAS storage for documents, files, videos, backups etc. I also need storage for my VMs, namely Nextcloud and Jellyfin. I don't want to waste NVMe space, so this would go on the SATA SSD as well.

- Pass the SSD to a VM running some NAS OS (OpenMediaVault, TrueNAS, simple Samba). I'd then set up different NFS/Samba shares for my needs. Jellyfin or Nextcloud would rely on the NFS share for their storage needs. Is that even possible and if so, a good idea? I could easily access all files, if needed. I don't know if there would be a problem with permissions or diminished read/wri

ZFS: Should I use NAS or Enterprise/Datacenter SSDs?

I posted a few days ago, asking how to set up my storage for Proxmox on my Lenovo M90q, which I have since settled. Or so I thought. The Lenovo has space for two NVMe and one SATA SSD.

There seems to be a general consensus that you shouldn't use consumer SSDs (even NAS SSDs like WD Red) for ZFS, since there will be lots of writes, which in turn will wear out the SSD fast.

There is some conflicting information out there, with some saying it's fine and a few GB of writes per day is okay, and others warning of several TB of writes per day.
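To get a feeling for how far apart those two claims are, here is a rough back-of-the-envelope calculation. It's a sketch with assumptions: the 2500 TBW rating is the one quoted further down for the WD Red SN700 2TB, the two daily write volumes are made-up stand-ins for "a few GB" and "several TB", and write amplification is ignored.

```shell
#!/bin/bash
# years_until_tbw TBW DAILY_GB
# Whole years until the rated endurance is used up (1 TB = 1000 GB).
years_until_tbw() {
  local tbw="$1" daily_gb="$2"
  echo $(( tbw * 1000 / daily_gb / 365 ))
}

years_until_tbw 2500 10     # light use, hypothetical 10 GB/day
years_until_tbw 2500 2000   # heavy use, hypothetical 2 TB/day
```

The light case lands in the hundreds of years, the heavy case at about three, so which camp is right depends almost entirely on the actual write volume.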

I plan on using Proxmox as a hypervisor for homelab use with one or two VMs running Docker, Nextcloud, Jellyfin, Arr-Stack, TubeArchivist, PiHole and such. All static data (files, videos, music) will not be stored on ZFS, just the VM images themselves.

I did some research and found a few SSDs with good write endurance (see table below) and settled on two WD Red SN700 2TB in a ZFS Mirror. Those drives have 2500TBW. For f

Storage Setup for Proxmox in Lenovo M90q (Gen 1)

Hej everyone! I’m planning on getting acquainted with Proxmox, but I’m a total noob, so please keep that in mind.

For this experiment, I’ve purchased a Lenovo M90q (Gen 1) to use as an efficient hardware basis. This system will later replace my current one. On it, I want to set up a small number of virtual machines, mainly one for Docker and one for NAS (or set up a NAS with Proxmox itself).

My main concern right now is storage. I’d like to have some redundancy built into my setup, but I am somewhat limited with the M90q. I have space for two M.2 2280 NVMe drives as well as one SATA port. There are also several options to extend this setup using either a Wi-Fi M.2 to SATA or the PCIe x8 to either SATA or NVMe. For now, I’d like to avoid adding complexity and stick with the onboard options, but I'm open to suggestions. I'd buy some new or refurbished WD Red NAS SSDs.

Given the storage options that I have, what would be a sensible setup to have some level of redundancy? I can think o

Does usage of third party youtube apps necessitate a VPN in the near future?

Greetings y'all. I've been using ways to circumvent YouTube ads for years now. I'd much rather donate to creators directly instead of using Google as a middle man via YouTube Premium. I'd even pay for Premium for just an ad-free version if the price weren't so outrageous. So far, I've used adblockers, Vanced and then ReVanced.

Since the recent developments in this matter, I've set up TubeArchivist, a self-hosted solution to download YouTube videos for later consumption. It mostly works great, with a few minor things that bother me, but I highly recommend it. ReVanced also still works, but nobody knows for how long.

The question now is, if I should use a VPN to obscure my identity to Google. I don't know if I'm being paranoid here but I wouldn't put it past Google to block my account, if they see YouTube traffic for my IP address and no served ads. Revanced even uses my main Google account, so not that far fetched.

So far, or at least to my knowledge, Google has never done thi

How to organize docker volumes into subdirectories using compose

Hei there. I've read that it's best practice to use docker volumes to store persistent container data (such as config, files) instead of using bind mounts. So far, I've only used the latter and would like to change this.

From what I've read, all volumes are stored in /var/lib/docker/volumes. I also understood that a volume is basically a subdirectory in that path.

I'd like to keep things organized and would like the volumes of my containers to be stored in subdirectories for each stack in docker compose, e.g.

    volumes/arr/qbit
           /arr/gluetun
           /nextcloud/nextcloud
           /nextcloud/database

Is this possible using compose?
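One approach I've come across, noted here as a sketch and not as the recommended way: a named volume using the `local` driver can be pointed at an arbitrary directory via the `type=none`, `o=bind` and `device=...` options. The base path `/srv/volumes` and the `stack_name` naming scheme are assumptions of mine. The function only prints the commands so they can be inspected before running anything.

```shell
#!/bin/bash
# Hypothetical base directory for all stacks; adjust to taste.
base="/srv/volumes"

# Print (not run) the commands that would create one bind-backed named volume.
# The target directory has to exist before the volume is first mounted.
volume_cmds() {
  local stack="$1" name="$2"
  echo "mkdir -p ${base}/${stack}/${name}"
  echo "docker volume create --driver local --opt type=none --opt o=bind --opt device=${base}/${stack}/${name} ${stack}_${name}"
}

volume_cmds arr qbit
volume_cmds nextcloud database
```

As far as I understand, the same three options can also go under `driver_opts` of a top-level volume in the compose file, but I haven't tested that myself.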

Another noob question: is there any disadvantage to using the default network docker creates for each stack/container?

checking for ip leaks using Docker, Gluetun and qBittorrent

Hej everyone.

Until now I've used a linux install and vpn software (airvpn and eddie) when sailing the high seas. While this works well enough, there is always room for improvement.

I am in the process of setting up a docker stack which so far contains gluetun/airvpn and qbittorrent. Here is my compose file:

    version: "3"
    services:
      gluetun:
        image: qmcgaw/gluetun
        container_name: gluetun
        cap_add:
          - NET_ADMIN
        volumes:
          - /appdata/gluetun:/gluetun
        environment:
          - VPN_SERVICE_PROVIDER=airvpn
          - VPN_TYPE=wireguard
          - WIREGUARD_PRIVATE_KEY=
          - WIREGUARD_PRESHARED_KEY=
          - WIREGUARD_ADDRESSES=10.188.90.221/32,fd7d:76ee:e68f:a993:63b2:6cc0:fe82:614b/128
          - SERVER_COUNTRIES=
          - FIREWALL_VPN_INPUT_PORTS=
        ports:
          - 8070:8070/tcp
          - 60858:60858/tcp
          - 60858:60858/udp
        restart: unless-stopped
      qbittorrent:
        image: lscr.io/linuxserver/qbittorrent:latest
        container_name: qbittorrent
        network_mod